
The global race for technological supremacy has entered a new, supercharged phase. It’s a contest measured not in military might or economic output alone, but in computational power—the raw ability to process information, simulate reality, and generate intelligence. In this high-stakes arena, the United States has just placed a monumental, billion-dollar wager. The Department of Energy (DOE), in a landmark collaboration with tech giants AMD, Hewlett Packard Enterprise (HPE), and Oracle, has greenlit the development of two revolutionary supercomputers at the legendary Oak Ridge National Laboratory (ORNL). Named Lux and Discovery, these systems represent more than just an upgrade; they signify a fundamental shift in how America will approach science, energy, and national security for the next decade and beyond.

This initiative is a direct response to a world where AI is no longer a niche field but the primary engine of innovation. From developing life-saving vaccines in record time to designing fusion reactors that could one day power our cities, the challenges of the 21st century demand a level of computational horsepower that was once the stuff of science fiction. Oak Ridge, already home to Frontier, the world’s first officially recognized exascale supercomputer, is being armed to lead this charge. Lux, arriving in 2026, is conceived as the nation’s first dedicated “AI factory,” while Discovery, slated for 2028, will push the boundaries of traditional scientific simulation to new, uncharted territories. Together, they form a two-pronged strategy to ensure American leadership in the very technology that will shape our future.

Lux: Forging Intelligence in a Digital Foundry

The traditional supercomputer is like a hyper-advanced calculator, capable of running complex simulations with breathtaking speed—modeling a supernova, simulating airflow over a jet wing, or predicting hurricane paths. Lux, however, is being built for a different purpose. The DOE has dubbed it America’s first “AI factory,” a term that brilliantly captures its mission: to manufacture intelligence. Instead of just crunching numbers, Lux’s primary role will be to train and refine the colossal foundation models that are the bedrock of modern artificial intelligence.

The A-to-Z of an AI Factory

Think of Lux not as a single machine, but as an end-to-end production line for creating powerful, specialized AI. Its architecture is being meticulously designed by AMD and HPE to handle the data-intensive, often chaotic workloads that define AI model training. This process involves feeding the system unfathomable amounts of data—from scientific literature and genomic sequences to satellite imagery and molecular structures—allowing the AI to learn patterns, relationships, and principles on its own. The “products” rolling off this assembly line will be highly sophisticated AI tools tailored for specific scientific domains. An AI trained on Lux could, for example, analyze millions of biological datasets to predict how a new virus might evolve, or sift through decades of climate data to design more resilient energy grids.

The potential applications are as vast as they are transformative:

- Materials Science: AI could design novel materials atom by atom, creating stronger, lighter alloys for aerospace or more efficient catalysts for clean energy production without years of trial-and-error in a physical lab.

- Personalized Medicine: By training a model on millions of anonymized patient records, genomic data, and treatment outcomes, Lux could help create AI that suggests hyper-personalized cancer treatments for individual patients.

- Clean Energy: It could accelerate research into nuclear fusion by simulating the volatile plasma inside a reactor with unprecedented accuracy, or design next-generation battery chemistries for electric vehicles and grid storage.

This represents a paradigm shift from simulation to generation. Instead of just modeling what we know, Lux will help us discover what we don’t.

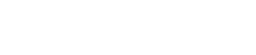

Under the Hood: The All-AMD Engine

At the heart of Lux is a tightly integrated ecosystem of AMD technology. The system will be powered by a massive array of AMD Instinct™ GPUs, the company’s flagship accelerators designed specifically for the parallel processing demands of AI. These GPUs will be paired with next-generation AMD EPYC™ CPUs, which will manage the data flow and system logic. Tying it all together will be AMD’s advanced networking hardware, ensuring that data can move between thousands of processors at lightning speed. That matters because data movement between processors is a critical bottleneck in many large-scale AI systems.

Dr. Lisa Su, the celebrated chair and CEO of AMD, underscored the mission’s gravity. “Discovery and Lux will use AMD’s high-performance and AI computing technologies to advance the most critical U.S. research priorities in science, energy, and medicine,” she stated, framing the project as a showcase for “the power of public-private partnership at its best.” This all-AMD architecture is a strategic choice, designed to create a seamless, optimized environment where hardware and software work in perfect harmony to tackle the unique challenges of AI at a national scale.

Discovery: Successor to a Titan, Pioneer of the Future

While Lux focuses on building AI, Discovery is being engineered to wield it. Planned as the direct successor to Frontier, Discovery’s mission is to take the raw power of exascale computing and make it smarter, faster, and more efficient. Frontier made history in 2022 by officially breaking the exascale barrier, performing over a quintillion (a billion billion) floating-point operations per second. It was a monumental achievement, akin to breaking the sound barrier or landing on the moon. Discovery, set to arrive in 2028, is designed not just to surpass that milestone but to redefine what’s possible with that level of power.
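To put “a quintillion operations per second” in perspective, the short sketch below does the arithmetic: one exaFLOPS is 10^18 floating-point operations per second, and it asks how long a much smaller machine would need to finish one second of exascale work. The 1 teraFLOPS comparison machine is a hypothetical assumption chosen for round numbers, not a figure from the article.

```python
# Sketch: how much work is "a quintillion operations per second"?
# ASSUMPTION (hypothetical, not from the article): a comparison machine
# sustaining 1 teraFLOPS (10**12 floating-point operations per second).

EXAFLOPS = 10**18                # one quintillion = a billion billion
ASSUMED_MACHINE_FLOPS = 10**12   # hypothetical 1 teraFLOPS machine
SECONDS_PER_DAY = 24 * 60 * 60


def seconds_to_match(work_flop: int, machine_flops: int) -> float:
    """Seconds a machine running at `machine_flops` needs to perform `work_flop` operations."""
    return work_flop / machine_flops


# Work an exascale system performs in a single second.
work_in_one_second = EXAFLOPS * 1

secs = seconds_to_match(work_in_one_second, ASSUMED_MACHINE_FLOPS)
print(f"{secs:,.0f} s, about {secs / SECONDS_PER_DAY:.1f} days")
```

Under that assumption, one second of exascale computing would keep the smaller machine busy for a million seconds, roughly eleven and a half days.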

Charting the Next Frontier of Scientific Simulation

Discovery will be the ultimate scientific instrument for the late 2020s and early 2030s. It will be the workhorse for America’s most ambitious research projects, running simulations of a scale and fidelity that are currently impossible. It will be built upon a future generation of AMD EPYC CPUs and Instinct GPUs, likely the MI430X or its successors, promising a significant leap in performance over its predecessor. ORNL Director Stephen Streiffer articulated the vision, noting that “the Discovery system will drive scientific innovation faster and farther than ever before,” empowering researchers to tackle problems that have long been beyond our computational grasp.

Imagine being able to simulate the entire life cycle of a star, model the intricate neural connections of a fruit fly’s brain in real time, or create a “digital twin” of the entire U.S. power grid to test its vulnerability to cyberattacks or extreme weather. These are the kinds of grand challenges that Discovery will be built to address. It will expand the DOE’s ability to design everything from safer nuclear reactors to more efficient biofuels, all within a virtual environment, saving billions of dollars and years of physical experimentation.

The Specter of Power Consumption: The Green Computing Imperative

One of the most formidable challenges facing the world of supercomputing is its insatiable appetite for electricity. Frontier, for example, consumes roughly 21 megawatts of power under load, enough to power over 20,000 homes. As these machines become exponentially more powerful, their energy footprint threatens to become unsustainable. A key design mandate for Discovery is to dramatically increase computational efficiency—delivering more performance per watt.

The project’s engineers face the daunting task of boosting bandwidth, processing speed, and data-handling capabilities while keeping the system’s overall energy use roughly on par with Frontier’s. This is a monumental engineering challenge that requires innovations at every level, from the silicon chip to the data center’s cooling systems. Achieving this goal would be a breakthrough in itself, setting a new standard for sustainable high-performance computing and providing a blueprint for future data centers worldwide. The success or failure of this green objective will be a critical test for the project, as the long-term viability of next-generation AI and HPC depends on our ability to manage their environmental impact.
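The efficiency goal boils down to one ratio: sustained performance divided by power draw. The sketch below computes it in GFLOPS per watt. The roughly 21 MW figure comes from the article; the roughly 1.1 exaFLOPS figure is Frontier’s approximate 2022 TOP500 (HPL benchmark) result. The threefold speedup for a successor is a purely hypothetical placeholder, not a published Discovery specification.

```python
# Sketch: performance per watt, the metric behind "more performance at
# roughly Frontier-level power".
# Frontier: ~1.1e18 FLOPS (approximate 2022 HPL result) at ~21 MW (per the article).
# HYPOTHETICAL: the successor's speedup below is an illustrative assumption only.


def gflops_per_watt(flops: float, watts: float) -> float:
    """Energy efficiency in billions of floating-point operations per second per watt."""
    return flops / watts / 1e9


FRONTIER_FLOPS = 1.1e18
FRONTIER_WATTS = 21e6

frontier = gflops_per_watt(FRONTIER_FLOPS, FRONTIER_WATTS)

# Hypothetical successor: same ~21 MW power budget, three times the performance.
HYPOTHETICAL_SPEEDUP = 3.0
successor = gflops_per_watt(FRONTIER_FLOPS * HYPOTHETICAL_SPEEDUP, FRONTIER_WATTS)

print(f"Frontier: {frontier:.1f} GFLOPS/W")
print(f"Hypothetical successor: {successor:.1f} GFLOPS/W")
```

Because the power budget is held fixed, efficiency rises one-for-one with performance. Holding energy use near Frontier’s while delivering several times the compute is therefore the same goal as multiplying GFLOPS per watt.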

A New Model of Innovation: The Public-Private Nexus

This billion-dollar initiative is not simply a government procurement project; it’s a deeply integrated partnership that braids together the strengths of public institutions and private enterprise. The DOE and ORNL provide the strategic vision, the scientific direction, and the grand challenges that need solving. Meanwhile, companies like AMD, HPE, and Oracle bring the cutting-edge hardware, engineering expertise, and cloud infrastructure necessary to build these complex systems.

The Silicon Valley-Beltway Alliance

This model recognizes a simple reality: the pace of technological innovation in the private sector, particularly in semiconductors and AI, is far too rapid for the government to keep up with on its own. By partnering with AMD, the DOE gains access to a multi-billion-dollar R&D pipeline and a roadmap of future technologies. HPE, a veteran in building some of the world’s largest supercomputers, brings the systems integration and architectural expertise to assemble thousands of components into a cohesive, functional whole.

Former U.S. Secretary of Energy Jennifer Granholm repeatedly emphasized this collaborative approach as essential for national competitiveness, arguing in various forums that “winning the race for the technologies of the future requires a new era of partnership, bringing together the brightest minds from our national labs, universities, and the private sector.” This project is the physical embodiment of that philosophy, a fusion of public mission and private innovation aimed at a common goal.

The Cloud Conundrum: Sovereignty in a Shared World

The inclusion of Oracle, a cloud computing giant, adds an interesting and complex layer to the partnership. Oracle’s role is to provide what it calls “sovereign, high-performance AI infrastructure” to support the co-development of the Lux cluster. This raises important questions about the future of national computing assets. How much of this critical infrastructure will reside on a private cloud platform versus being under direct federal control on-premises at Oak Ridge?

Mahesh Thiagarajan, an executive at Oracle Cloud Infrastructure, stated that the company is committed to delivering a “sovereign” solution. In this context, “sovereign” implies that the infrastructure will be physically located in the U.S., managed by U.S. personnel, and logically isolated to meet strict government security and data privacy requirements. However, the deep integration of a commercial cloud provider into a flagship national security and science asset is a relatively new development. It reflects a trend toward hybrid models but also invites scrutiny. Striking the right balance between leveraging the agility and scalability of the cloud and maintaining absolute control and security over sensitive government-funded research will be a critical challenge for the DOE to navigate as Lux comes online.

The stakes are immense. The models trained on Lux and the discoveries made on Discovery will be among the nation’s most valuable intellectual property. Ensuring their integrity and security is paramount, making the implementation of this public-private cloud partnership a closely watched experiment that could set a precedent for future national projects.

Source: https://www.techradar.com
