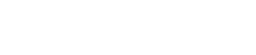

Hugging Face has rapidly emerged as a linchpin of the open AI ecosystem. Co-founder Thomas Wolf notes that “open-source is the key approach to democratize machine learning.” The company’s flagship Transformers library (42,000+ GitHub stars, ~1 million downloads per month) and Diffusers toolkit for generative models now underpin thousands of state-of-the-art AI systems. Today the Hugging Face Hub hosts a massive library. It contains over 1 million community-contributed models spanning NLP, vision, speech, and more. There are also 190,000 datasets and 55,000 demo apps (“Spaces”). This open catalog lets developers worldwide browse, fine-tune, and deploy AI models as easily as if they were browsing a “GitHub of machine learning.” It avoids the vendor lock-in of black-box APIs. In short, Hugging Face’s mission of “advancing and democratizing AI” through open-source tools is playing out on a global scale.

HUGS (Oct 2024): Hugging Face introduced HUGS – zero-configuration inference microservices. This simplifies running open LLMs in any data center. HUGS is optimized for NVIDIA/AMD GPUs (and soon AWS Inferentia, Google TPUs). It exposes an OpenAI-compatible API as a drop-in replacement. For developers seeking extreme on-prem AI performance, platforms like NVIDIA DGX Spark show how compact supercomputers can accelerate inference and model training at scale.

Google Cloud (Nov 2025): A deep partnership with Google Cloud now caches HF’s 2 million+ open models on Google’s CDN. This enabled 10× growth in usage over three years. Google works with HF to make Vertex AI, Kubernetes, and Cloud Run “the best place to build with open models.” Both companies use their storage and networking tech for faster downloads.

Azure Foundry (2025): Hugging Face models (over 1.7 million public models) are now curated into Microsoft’s Azure AI Foundry (formerly Azure ML) catalog. At Microsoft Build 2025, the companies announced tighter integration. Azure users can now design and deploy AI agents at scale using HF’s open models.

Dell Enterprise Hub (May 2024): Dell launched an on-premises Hugging Face portal. It provides customers with ready-to-run containers for popular LLMs (e.g., Meta’s Llama 3) on Dell hardware. Dell is Hugging Face’s first preferred on-prem infra partner. This brings the ease of the HF Hub to data centers worldwide.

Community Milestone (2025): By late 2025, the Hugging Face Hub had surpassed 2 million open-source models. The second million arrived far faster than the first (about 335 days versus roughly 1,000 days). Analysts expect the Hub to reach 3 million models during 2026, which underscores the platform’s accelerating pace of innovation.

Explosive Growth of the AI Community

Hugging Face’s user base and content have exploded in parallel. By late 2024 the platform counted over 5 million users and 100,000+ organizations, reflecting widespread adoption by startups, research labs, and enterprises. The community is global: contributions range from hobbyist NLP fine-tuners to large tech firms. As one HF analysis notes, the Hub is the “largest repository of AI models, datasets, and applications” capturing a diverse ecosystem of innovation. With low-resource and fine-tuned models surging (e.g. smaller LLM variants and domain-specific models), more developers can participate and iterate.

This momentum shows in raw numbers: HF’s model repository grew exponentially from 2022 to 2025. Over four years it added 2 million models, with the second million arriving in just 335 days. By mid-2025 the Hub already hosted ~1.8M models and 560,000 applications. Such growth means nearly two-thirds of the models were contributed in the last 18 months, a pace unseen in traditional closed-source development. Hugging Face even tested its community with HuggingChat, an open chat platform: between 2023 and 2025 it served 1M+ users and over 100,000 AI “assistants” built on 20+ open models. These trends highlight a real appetite for transparent, customizable AI, and a vibrant HF community pushing innovation further than ever.
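To make the acceleration concrete, the short calculation below compares average daily upload rates using only the figures quoted in this article (roughly 1,000 days for the first million models, 335 days for the second). It is back-of-the-envelope arithmetic rather than an official Hugging Face statistic, and the constant and function names are illustrative.

```python
# Figures quoted in the article: days needed to add each million models.
FIRST_MILLION_DAYS = 1_000   # approximate, per the article
SECOND_MILLION_DAYS = 335
MODELS_PER_MILESTONE = 1_000_000


def models_per_day(models: int, days: int) -> float:
    """Average number of models added per day over a period."""
    return models / days


first_rate = models_per_day(MODELS_PER_MILESTONE, FIRST_MILLION_DAYS)
second_rate = models_per_day(MODELS_PER_MILESTONE, SECOND_MILLION_DAYS)
speedup = second_rate / first_rate

print(f"First million:  {first_rate:,.0f} models/day")
print(f"Second million: {second_rate:,.0f} models/day")
print(f"Speed-up:       {speedup:.1f}x")
```

On these numbers the second million arrived at roughly three times the average daily rate of the first.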

Enterprise Partnerships and Adoption

Major cloud and enterprise players are embedding Hugging Face directly into their AI platforms, making open models easier to deploy at scale.

Google Cloud integration

Google Cloud now integrates Hugging Face models into Vertex AI and other services. Usage of HF models on Google Cloud has increased 10× over the past three years, reaching tens of petabytes of monthly downloads. To support this growth, Google and Hugging Face are building a CDN gateway that caches HF model data on Google’s infrastructure, significantly speeding up model access and deployment.

Microsoft Azure AI Foundry

Microsoft has taken a similar approach. Hugging Face’s model catalog is now a built-in component of Azure Machine Learning and Azure AI Foundry, allowing Azure customers to deploy over 11,000 popular open models with just a few clicks. This tight integration lowers the barrier to entry for enterprises adopting open-source AI.

On-Premises Infrastructure and Enterprise Deployment

Dell Enterprise Hub

Infrastructure vendors have also embraced Hugging Face. Dell launched the Enterprise Hub in May 2024, bringing the Hugging Face experience directly to on-premises environments. The platform offers secure, ready-to-run containers for popular LLMs, bundled with all required libraries, enabling organizations to deploy large models on Dell hardware with minimal setup.

Inference Endpoints and HUGS

These partnerships complement Hugging Face’s own enterprise tools. Inference Endpoints and HUGS turn any Hugging Face model into a scalable API within minutes. This allows even small teams to deploy production-grade AI features without managing complex machine learning infrastructure.
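Because HUGS is described as exposing an OpenAI-compatible API, a client can in principle talk to it using the familiar chat-completions request shape. The sketch below uses only the Python standard library. The base URL, port, and model name are hypothetical placeholders, and the `/v1/chat/completions` path is assumed from the OpenAI convention rather than taken from Hugging Face documentation.

```python
import json
import urllib.request

# Hypothetical values: replace with your own deployment's address and model.
BASE_URL = "http://localhost:8080/v1"
MODEL_NAME = "my-open-llm"


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL_NAME):
    """Build an OpenAI-style chat-completions request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request):
    """Send the request and return the first reply (needs a running server)."""
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


request = build_chat_request("Summarize what the Hugging Face Hub is.")
print(request.full_url)
```

Calling `send(request)` only works against a live endpoint. The practical appeal of a drop-in replacement is that switching between a proprietary API and a self-hosted open model should mostly come down to changing `BASE_URL` and `MODEL_NAME`.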

Open Models at Enterprise Scale

By collaborating with AWS, Google Cloud, Microsoft Azure, and hardware vendors, Hugging Face ensures that open models are accessible across every deployment scenario. From cloud-native startups to regulated, on-premises enterprises, organizations can adopt open AI without sacrificing performance, security, or scalability.

Open Collaboration vs. Closed AI

Hugging Face’s rise stands in contrast to the walled gardens of some big tech AI offerings. Players like OpenAI and Anthropic offer powerful models, but they do so through closed APIs. HF executives warn that outsourcing AI entirely to proprietary APIs can expose companies to hidden data and IP risks.

Instead, HF’s stack is built for transparency. Organizations can download models, fine-tune them on-prem, and keep their data in-house. This approach aligns with recent moves by major labs. For instance, Meta has open-sourced its Llama series and teamed up with HF on an AI startup accelerator in Europe. HF’s platform now hosts many of these open heavyweight models, including Meta’s Llama 4 and Google’s Gemma series. This empowers developers to mix and match sources.
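As a rough illustration of what downloading a model for in-house use involves, the sketch below builds the Hub’s direct file URL and saves a file to local disk using only the standard library. The repository ID `my-org/my-model` is a hypothetical placeholder, and gated or private repositories additionally require an access token, which this sketch omits.

```python
import urllib.request
from pathlib import Path
from urllib.parse import quote

HUB_BASE = "https://huggingface.co"


def hub_file_url(repo_id, filename, revision="main"):
    """Return the direct download URL for one file in a Hub repository."""
    # Revisions may contain slashes (e.g. "refs/pr/1"), so encode them fully.
    return f"{HUB_BASE}/{repo_id}/resolve/{quote(revision, safe='')}/{quote(filename)}"


def download_file(repo_id, filename, target_dir, revision="main"):
    """Download one file into target_dir (needs network access)."""
    target = Path(target_dir) / filename
    target.parent.mkdir(parents=True, exist_ok=True)
    urllib.request.urlretrieve(hub_file_url(repo_id, filename, revision), target)
    return target


# "my-org/my-model" is a placeholder, not a real repository.
url = hub_file_url("my-org/my-model", "config.json")
print(url)
```

In practice most teams would likely reach for the `huggingface_hub` library, whose `hf_hub_download` and `snapshot_download` functions add caching and authentication. Either way the files end up on infrastructure the organization controls.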

Big tech companies are also cooperating with Hugging Face to bolster open innovation. Google’s Ryan Salva praises HF for enabling companies to “access, use and customize now more than 2 million open models.” He notes that Google is contributing its own 1,000+ open models back to the community. Meta’s vice president for Europe highlighted that FAIR’s new startup program gives teams access to Hugging Face tools and models alongside Meta’s research.

In short, HF has positioned itself as the open-ecosystem complement to proprietary AI. It provides a neutral ground where models from OpenAI competitors, academic labs, and startups coexist. This “whatever works best” strategy challenges the status quo. It enables users to avoid vendor lock-in and prioritize privacy, sovereignty, or performance as needed.

Why Hugging Face Matters in 2026

As 2026 dawns, Hugging Face’s impact on AI is unmistakable. Its open-source libraries and Hub have set de facto standards for how to build and share AI. Analysts note that open-source momentum is now so strong that “the real story will be whether a new wave of open systems emerges that doesn’t just match proprietary models, but…moves beyond them.”

In other words, HF’s community-driven innovation may soon surpass what closed models alone can do. Backed by a 2023 funding round that valued the company at $4.5B and by a global contributor base, Hugging Face looks set to keep democratizing AI. By allowing anyone to train, deploy, and combine models, from transformers to diffusion-based image generators, HF is fueling a more inclusive AI ecosystem.

Its collaborations with Google, Microsoft, Meta, and others underscore how central open models have become. For tech builders in early 2026, Hugging Face is not just a toolkit: it’s a thriving marketplace and community that’s reshaping where and how AI happens. It offers an alternative to the walled gardens of big tech’s platforms.

In the broader AI landscape, Hugging Face stands as proof that openness and collaboration can accelerate innovation on a massive scale.

Trending Hugging Face Models Developers Are Using in 2026

Based on user engagement, likes, and featured projects on Hugging Face, these models and tools are gaining the most traction among developers in 2026:

- DeepSite v3 – a popular Vibe Coding tool that generates full applications from natural language prompts.

- Open LLM Leaderboard – a widely used benchmark for ranking and evaluating open-source LLMs.

- FLUX.1 [dev] – a powerful text-to-image generation model used in creative and commercial projects.

- AI Comic Factory – a creative AI tool for generating comics from a single prompt.

- MusicGen – an advanced text-to-music generation model developed by Meta.

- InstantID – image generation with strong face identity preservation.

- TRELLIS – scalable 3D generation from images for professional workflows.

- Hunyuan3D-2.0 – text-to-3D and image-to-3D generation for next-gen content creation.

Sources: Industry reports and news articles published 2024–2025 (Hugging Face blog posts, AI World analysis, AWS and Meta press releases).

FAQ – Hugging Face

What is Hugging Face?

Hugging Face is an open-source AI platform providing libraries like Transformers and Diffusers, a massive model hub, and tools for NLP, vision, and speech. Its Hub hosts millions of models, datasets, and demo apps, allowing developers to build, fine-tune, and deploy AI models without vendor lock-in.

Can Hugging Face models be deployed on-premises and in the cloud?

Yes. Hugging Face supports multiple deployment options. Dell Enterprise Hub offers on-premises containers for popular LLMs, while cloud platforms like Google Cloud (including Vertex AI), Azure, and AWS integrate HF models for scalable AI deployments.

What is HUGS?

HUGS is a zero-configuration inference microservice that runs open LLMs anywhere. It’s optimized for GPUs and other accelerators and exposes an OpenAI-compatible API, making it easy to deploy models on-premises or in the cloud without complex setup.

How does Hugging Face differ from closed AI platforms?

Hugging Face promotes transparency and collaboration. Users can download, fine-tune, and deploy models on-premises, mix models from different labs, and avoid vendor lock-in. Unlike proprietary APIs, HF allows privacy-conscious and performance-critical AI deployments while encouraging community innovation.

How many models does Hugging Face host?

Hugging Face hosts millions of open-source AI models across NLP, vision, audio, and multimodal tasks, with the total growing rapidly year over year.
