Over the past year, Hugging Face has quietly evolved from a popular model repository into core infrastructure for open AI development, providing the tooling, deployment layer, and neutral ground that much of the open-source AI ecosystem now depends on.

The company’s model hub now hosts around 2 million open models covering diverse tasks and languages, and its developer base has crossed 10 million users. Rather than a flashy consumer app, Hugging Face has become a critical infrastructure layer for the global open-source AI ecosystem, enabling anyone to train, deploy, or run models. It underpins collaborations with major cloud providers, including Google, Microsoft, and AWS, and sits at the heart of many modern AI stacks, quietly powering everything from enterprise systems to research labs.

From Model Hub to Infrastructure Provider

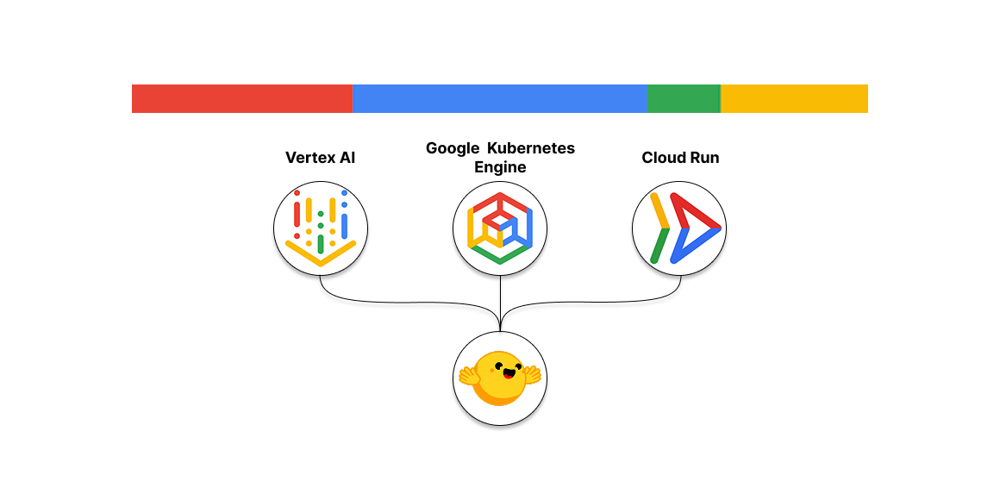

Hugging Face’s evolution into an infrastructure platform is evident in its deepening partnerships. Its collaborations with Microsoft and Google mean Hugging Face models can be deployed on Azure AI Foundry and Google Cloud almost as easily as on the Hugging Face site. For example, usage of Hugging Face by Google Cloud customers has grown tenfold in three years, amounting to “tens of petabytes of model downloads every month, in billions of requests,” according to a Hugging Face blog. Through Vertex AI, Google Kubernetes Engine and Cloud Run, Google now offers out-of-the-box access to Hugging Face’s model library, even caching models at the edge for faster startup.

Microsoft likewise reports 10,000+ HF models preloaded for Azure customers, with ‘day-0 releases’ of new models and continuous security vetting of weights for enterprise use. In practice, this means Hugging Face has become a neutral layer: even OpenAI’s own open-weight models (the “GPT-OSS” family) are served via Hugging Face’s Inference Providers service. As one blog notes, “OpenAI GPT OSS models are accessible through Hugging Face’s Inference Providers service… the same infrastructure that powers OpenAI’s official demo”. This cross-platform approach reinforces HF’s role not just as a library of models, but as the back-end fabric for deploying and running them across clouds and data centers.

New Tools: Spaces, Endpoints and Enterprise Hub

Beyond partnerships, Hugging Face has rolled out richer developer tools. Its Spaces platform – a serverless environment for hosting interactive AI demos – surpassed half a million user-created apps as of mid-2024. A recent “Dev Mode” lets creators edit Space code directly via VS Code, speeding up iteration. More broadly, Spaces has become a kind of “App Store” for AI demos and small services, cementing HF’s reputation as “the GitHub of machine learning” (as VentureBeat observed) by giving the community an easy way to share and discover applications.

For production deployments, Hugging Face’s Inference Endpoints service has matured into an enterprise-grade offering. Customers can click from a model page to spin up a hosted API endpoint (on CPUs or GPUs) with auto-scaling. These endpoints are fully managed – covering the cloud VMs, containers and orchestration – so companies can use open models in production without building their own infrastructure. Through its enterprise plans and partnerships, HF also offers private model hosting and on-prem options. For example, Dell’s new “Enterprise Hub” for Hugging Face provides turn-key containers and scripts to run HF models on Dell servers in a company’s data center. Dell’s marketing materials even call HF “the leading open platform for AI builders”, noting that HF’s portal now extends to on-prem deployments and accelerators.
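As a rough illustration of what calling such a hosted endpoint can look like, the sketch below builds (but does not send) an HTTP request using only Python's standard library. The endpoint URL and token are placeholders, the helper name `build_endpoint_request` is hypothetical, and the `inputs`/`parameters` payload follows the common text-generation convention; the exact schema depends on the task and container an endpoint runs.

```python
import json
import urllib.request


def build_endpoint_request(
    endpoint_url: str,
    token: str,
    prompt: str,
    max_new_tokens: int = 64,
) -> urllib.request.Request:
    """Build a POST request for a hosted text-generation endpoint.

    Assumption: the endpoint accepts a JSON body with "inputs" and
    "parameters" keys and a bearer token in the Authorization header.
    """
    payload = {
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens},
    }
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder URL and token: replace with real values before sending.
    req = build_endpoint_request(
        "https://example.invalid/my-endpoint", "hf_xxx", "Hello"
    )
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req).read()
```

Keeping request construction separate from sending makes the call easy to inspect and test without network access or a live endpoint.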

These advances – from interactive Spaces to production APIs – show HF’s shifting focus. It has grown from a community model hub into a full AI stack provider, supplying the compute and tooling usually associated with big tech clouds. In effect, Hugging Face now gives startups and enterprises on-demand access to AI models, comparable to what Google, Microsoft or AWS offer.

A Platform Among AI Giants

Hugging Face occupies a unique middle ground alongside industry giants. It does not primarily build proprietary AI services like OpenAI or Google, nor does it sell chips or cloud capacity like NVIDIA or AWS. Instead, HF’s niche is enabling and aggregating open models from all sources. It hosts Meta’s Llama family, Google’s Gemma models, AI21’s Jamba, Chinese models like Qwen and DeepSeek, and now even OpenAI’s open-weight GPT-OSS series – all in one place.

This ubiquity makes HF “the gateway to open models”, as one HF blog put it. Because Hugging Face is model-agnostic, its platform naturally complements both open and closed ecosystems. For example, while Google pushes its Gemini models as proprietary APIs, it simultaneously integrates HF to offer Google users easy access to other open models on Vertex AI. Likewise, Microsoft’s Azure AI Foundry now features Hugging Face’s collection so Azure customers can pick the best model (open or closed) for their needs. In short, HF has become a common foundation layer in an AI landscape otherwise fractured by walled gardens.

Global investment and attention in AI remain dominated by U.S. tech firms. But open-source players are surging – especially internationally – and HF is a hub for that trend. As the Red Hat Developer report observes, 2025 saw an explosion of new open models from China (DeepSeek’s R1, Alibaba’s Qwen) and Europe (Mistral), with many equaling the performance of closed models. Hugging Face hosts most of these newcomers, letting any developer tap into them. In effect, HF bridges national ecosystems: Chinese labs, European initiatives (like BigScience’s BLOOM), startups and academia all share and build on the same HF repository. This open exchange contrasts sharply with the secretive development at firms like OpenAI or Anthropic – underscoring HF’s strategic role as a global AI commons.

Why Open-Source AI Matters

Hugging Face’s rise mirrors a larger shift: governments, companies and developers are treating open-source AI as a strategic necessity. The White House’s 2025 AI Action Plan explicitly “encourage[s] open-source and open-weight AI,” calling such models of “unique value” for startups, businesses and government alike. Open models let organizations use AI without “being dependent on a closed model provider,” which is crucial if they handle sensitive data or require audits. The plan even describes open models as having “geostrategic value,” suggesting that leading open models (rooted in American values) could become global standards.

Globally, the trend toward “sovereign AI” is accelerating. A TechNode analysis notes that governments and regulated industries are investing in AI they can control and trust. European initiatives (the EU AI Act, Gaia-X) and national programs (Singapore’s SEA-LION, the UAE’s Falcon) all emphasize open frameworks and local data. Hugging Face fits this drive: its open platform gives countries and enterprises “full visibility” into model internals, enabling customized, compliant AI. As one expert piece points out, 41% of organizations now prefer open-source generative AI, citing transparency, cost and performance tuning as motivations.

For startups and enterprises, open-source AI also unlocks rapid innovation. Instead of licensing a closed LLM at high cost, a company can download an open model from HF, fine-tune it on proprietary data, and deploy anywhere. This flexibility is why even hardware vendors like NVIDIA are releasing open models (its “Nemotron” series) for edge and on-device AI. In practice, open models have become powerful enough to run on local devices or in-browser, making on-device AI assistants and robots feasible. In sum, the open ecosystem that Hugging Face curates is no longer peripheral — it has become central to AI strategies across sectors.
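The first step of that workflow, pulling a file from the Hub, can be sketched with the standard library alone. This assumes the Hub's public `resolve` URL layout (`https://huggingface.co/<repo_id>/resolve/<revision>/<filename>`); the helper name `hub_file_url` is hypothetical and the repository ID is only an illustration. In practice the `huggingface_hub` client library is the usual route, and gated or private repositories additionally require an access token.

```python
from urllib.parse import quote

HUB_BASE = "https://huggingface.co"


def hub_file_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Return the direct-download URL for one file in a Hub model repo.

    Assumption: the repository is public and uses the standard
    /resolve/<revision>/<filename> path layout.
    """
    return (
        f"{HUB_BASE}/{quote(repo_id, safe='/')}"
        f"/resolve/{quote(revision, safe='')}"
        f"/{quote(filename, safe='/')}"
    )


if __name__ == "__main__":
    # Illustrative repo ID; no network request is made here.
    print(hub_file_url("openai/gpt-oss-20b", "config.json"))
```

Pinning `revision` to a specific commit hash instead of `main` keeps a fine-tuning pipeline reproducible even if the upstream repository changes.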

Real-World Impact: Agents, Robotics and Edge AI

The growth of HF’s platform is evident in emerging use cases. AI agents (multi-step autonomous bots) have gained traction, often built by chaining together open models from HF and other libraries. For example, NVIDIA’s new Nemotron Nano models (hosted on HF) are explicitly optimized for on-device reasoning, “making on-device and near-edge AI assistants practical for real-time decision-making”. These tiny LLMs demonstrate how open models on Hugging Face now drive next-generation applications in customer support, IoT devices and smart automation.

Robotics is another frontier where HF is quietly influential. In mid-2025 Hugging Face unveiled the Reachy Mini – an 11‑inch desktop humanoid robot kit priced at $299. This bold move (following HF’s acquisition of Pollen Robotics) was aimed at developers: Reachy Mini ships with native Hugging Face support, so programmers can hook it up to thousands of open AI models via Spaces. As HF’s CEO put it, the goal is to “become the desktop, open-source robot for AI builders.” Because Reachy’s design and software are fully open-source, HF also frames this as a response to privacy and monopoly concerns in robotics. By embedding HF’s cloud-connected hub into a physical robot, Hugging Face is literally giving AI embodiment to its open ecosystem.

Edge and on-device inference are also growing HF domains. The platform now lists numerous mobile-optimized models (like quantized Llama variants) and tutorials for running HF models on phones and in browsers. NVIDIA’s Nemotron portfolio again illustrates this: the Nano family runs efficiently on small GPUs and even phones, enabling edge AI agents. More broadly, the surge in tiny-model research (FP4 quantization, new architectures) has largely been shared through HF’s open channels. In short, Hugging Face is spreading AI from the cloud to the edge: from datacenters and laptops to pocket devices and robots, the same open-model pipeline flows through its platform.
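A back-of-the-envelope estimate shows why quantization matters so much for edge devices. The sketch below assumes weight storage dominates memory use and ignores activations, the KV cache and quantization metadata; the 7-billion-parameter count is an illustrative assumption, not a figure for any specific model.

```python
def weight_memory_gb(n_params: int, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 10**9 bytes)."""
    total_bytes = n_params * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    n_params = 7_000_000_000  # hypothetical 7B-parameter model
    for bits in (16, 8, 4):
        print(f"{bits}-bit weights: {weight_memory_gb(n_params, bits):.1f} GB")
```

Under these assumptions, moving from 16-bit to 4-bit weights cuts storage from about 14 GB to about 3.5 GB, which is roughly the difference between needing a datacenter GPU and fitting in the memory of a laptop or high-end phone.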

Open-Source vs. Closed Ecosystems: The Tension

A clear tension has emerged between closed, proprietary AI offerings and the open ecosystem that HF champions. On one side, companies like OpenAI, Google and Anthropic keep their flagship models (GPT-4.x, Gemini, Claude) exclusive, often available only via API. On the other, Hugging Face fosters an open economy of models. Industry analysts note that even as closed models push the cutting edge, open-weight models are rapidly closing the performance gap. The 2025 AI Index Report found that open-weight LLMs narrowed the benchmark gap with closed models to roughly 1–2%, while inference costs for a given level of capability fell by a factor of hundreds.

Recent events illustrate this clash. In August 2025 OpenAI surprised the community by releasing open-weight models on HF (the “GPT-OSS” line), implicitly acknowledging the momentum of open weights. Meanwhile, Chinese teams (DeepSeek, Qwen) have challenged Western closed leaders by open-sourcing powerful models that can be run anywhere. As one Red Hat report summarized: once the proprietary giants (Gemini, ChatGPT, Claude) are set aside, “we’re left with a few contenders” – DeepSeek, Qwen, etc. – “who know open source.”

Hugging Face sits at the center of this dynamic. Its community and tools reinforce openness even in closed-company contexts. For example, the HF enterprise platform allows businesses to deploy open models securely on private clouds, offering an alternative to cloud-only APIs. The Dell-HF partnership explicitly frames itself as a challenge to “an industry often dominated by closed, proprietary models,” heralding “a move toward open-source AI technologies” that it calls revolutionary. In other words, HF’s direction suggests that future AI development may hinge on open collaboration and transparency – even if closed models retain headline attention.

Looking Ahead: Hugging Face’s Future Role

As we enter 2026, Hugging Face’s trajectory points toward continued growth as the silent engine of AI innovation. Its model hub and tooling have become essential to developers, much as GitHub underpins software development. We can expect HF to deepen its cloud integrations (including its HUGS offering on AWS and other partnerships) and expand enterprise features (security scanning, compliance tools, etc.). The Reachy Mini robotics project indicates HF is not afraid to pioneer new areas by fusing hardware with open AI.

Crucially, HF’s open ethos may shape the next phase of AI. As regulators and institutions press for transparency, Hugging Face is already delivering a form of “open governance” to AI: every public model on its platform can be inspected, forked or improved by anyone. Its collaborative ecosystem means breakthroughs (like faster transformer kernels, multilingual models or efficient quantization) propagate quickly through shared libraries and Spaces.

In sum, Hugging Face has become a quiet backbone of modern AI: not flashy, but deeply embedded in how AI systems are built, run and governed. Its story over the past 18 months underscores a shift in the industry toward democratized tools and infrastructure. If that shift continues, Hugging Face’s strategic importance will only grow. By anchoring open models, decentralized development and cross-platform deployment, Hugging Face looks poised to remain a pivotal enabler of the next phase of AI.

Internal source: https://huggingface.co/blog
