The Foundational Flaw in the AI Gold Rush

The adoption of artificial intelligence in the business world is no longer a forecast; it’s a reality unfolding at a dizzying pace. A recent landmark report from McKinsey, ‘The State of AI’, finds that 78% of organizations now use AI in at least one business function, a sharp jump from 55% in 2023. This isn’t just about experimentation; it’s about tangible results. PwC reports that nearly two-thirds of companies adopting agentic AI (systems capable of autonomous action) are already seeing measurable increases in productivity.

This rapid integration, however, has exposed a critical vulnerability. Most of this AI development is happening on infrastructure designed for a different era. The dominant paradigm remains the centralized cloud: massive, remote data centers that, while simplifying initial setup, introduce a host of problems that are becoming untenable for the real-time, context-aware applications of tomorrow.

Think of it as trying to build a global network of interconnected smart cities using the Roman aqueduct system. It was a marvel for its time, but it’s fundamentally unsuited for the dynamic, high-velocity demands of the modern world. Centralized clouds create inherent latency as data must travel hundreds or thousands of miles for processing. They foster vendor lock-in, stifling innovation and creating strategic risks. And they represent single points of failure; an outage at a major cloud provider can bring countless businesses to a screeching halt. For applications in finance, healthcare, or autonomous vehicles, where millisecond delays can have catastrophic consequences, this architectural model is not just inefficient—it’s dangerous.

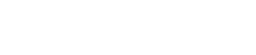

Enter the Digital Nervous System: Deconstructing Model Context Protocol

Out of this necessity, a new architectural philosophy is emerging, and its most potent implementation is the Model Context Protocol (MCP). While not yet a household name, MCP is the foundational layer that many experts believe will underpin the next decade of digital innovation. It is not an application or a platform, but something more elemental: the connective tissue, or digital nervous system, that allows intelligent workloads to run seamlessly and contextually across any environment—be it a massive cloud server, a local on-premises machine, or a tiny sensor at the network’s edge.

So, what makes it different? Where traditional server architecture is siloed and static, MCP is built on the principles of composability and context awareness.

The Power of Composable, Context-Aware Infrastructure

Imagine building a complex structure with custom-welded steel beams. If you need to change the design, you have to tear it all down. This is the old way. MCP, by contrast, is like building with a highly advanced set of LEGO bricks. Its servers are “composable,” meaning they can dynamically assemble and reassemble the resources they need—computing power, memory, data access—based on the specific demands of an application at that exact moment.

This dynamic allocation is guided by context. An MCP server understands the workload it’s running, the data it needs, the network conditions, and the user’s intent. This allows it to adapt in real time, connecting to multiple data sources and networks fluidly. This is the key to delivering rich, intelligent experiences that feel instantaneous and are deeply integrated into the environment, a stark contrast to the often-clumsy and delayed responses of systems tethered to a distant cloud.
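To make context-guided allocation concrete, here is a minimal Python sketch of a single placement decision. It is not taken from any MCP specification: `Site`, `WorkloadContext`, and `place` are hypothetical names, and the policy (keep only sites with enough memory inside the latency budget, then prefer sites that already hold the data) is just one plausible rule under those assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    kind: str              # "cloud", "on_prem", or "edge"
    latency_ms: float      # measured round-trip time to the requesting user
    free_memory_gb: float  # memory currently available for new workloads
    has_data: bool         # whether the workload's data already lives here


@dataclass(frozen=True)
class WorkloadContext:
    memory_gb: float          # memory the workload needs right now
    latency_budget_ms: float  # slowest acceptable round trip


def place(ctx: WorkloadContext, sites: list[Site]) -> Site | None:
    """Return the best site for this workload, or None if nothing fits."""
    candidates = [
        s for s in sites
        if s.free_memory_gb >= ctx.memory_gb and s.latency_ms <= ctx.latency_budget_ms
    ]
    if not candidates:
        return None
    # Prefer sites that already hold the data (False sorts before True), then lower latency.
    return min(candidates, key=lambda s: (not s.has_data, s.latency_ms))


# Hypothetical inventory: one remote cloud region, one edge node, one on-premises rack.
sites = [
    Site("us-east", "cloud", latency_ms=80.0, free_memory_gb=64.0, has_data=True),
    Site("factory-edge", "edge", latency_ms=4.0, free_memory_gb=8.0, has_data=False),
    Site("hq-rack", "on_prem", latency_ms=15.0, free_memory_gb=32.0, has_data=True),
]
chosen = place(WorkloadContext(memory_gb=6.0, latency_budget_ms=20.0), sites)
print(chosen.name if chosen else "no site fits")
```

With these sample sites, a 6 GB workload with a 20 ms budget lands on the on-premises rack: the cloud region misses the latency budget, and the rack beats the edge node because the data already lives there.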

Under the Hood: The Engineering of Resilience

To achieve this fluidity, MCP employs an elegant and extensible communication stack. At its core, it’s designed for speed, resilience, and interoperability, ready for the rigors of production-grade AI, even in highly regulated sectors.

An Interoperable Language for Machines

All messaging within an MCP environment runs on JSON-RPC 2.0. While that may sound technical, the concept is simple: JSON-RPC is a lightweight remote procedure call protocol whose messages are plain JSON objects. It’s like establishing Esperanto as the official language for all your AI components, so they can communicate reliably without clunky, performance-draining translators. Because every request carries an ID that its response echoes back, requests can be handled asynchronously (one component can ask another for information and continue its work without waiting for an immediate reply). The protocol also supports one-way notifications that expect no reply and defines a standard error format, so robust error handling is baked in from the start, and MCP layers a defined connection lifecycle, including graceful shutdown, on top.
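The message shapes JSON-RPC 2.0 defines can be sketched in a few lines of Python. This is a minimal illustration, not an MCP SDK: the helper names (`make_request`, `make_notification`, `handle`) are hypothetical, while the field names and the error codes -32700 (parse error) and -32601 (method not found) come from the JSON-RPC 2.0 specification. `ping` is a method MCP defines; its handler here simply returns an empty result.

```python
from __future__ import annotations

import json
from typing import Any, Callable

PARSE_ERROR = -32700       # JSON-RPC 2.0: invalid JSON was received
METHOD_NOT_FOUND = -32601  # JSON-RPC 2.0: the method does not exist

Handler = Callable[[dict], Any]


def make_request(method: str, params: dict, req_id: int) -> str:
    # Requests carry an id; the matching response echoes it back.
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "method": method, "params": params})


def make_notification(method: str, params: dict | None = None) -> str:
    # Notifications omit the id, which tells the receiver not to reply.
    msg: dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


def _error(req_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}})


def handle(raw: str, handlers: dict[str, Handler]) -> str | None:
    """Process one incoming message; return the reply, or None for notifications."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError:
        return _error(None, PARSE_ERROR, "Parse error")
    is_notification = "id" not in msg
    handler = handlers.get(msg.get("method"))
    if handler is None:
        return None if is_notification else _error(msg["id"], METHOD_NOT_FOUND, "Method not found")
    result = handler(msg.get("params", {}))
    if is_notification:
        return None
    return json.dumps({"jsonrpc": "2.0", "id": msg["id"], "result": result})


handlers: dict[str, Handler] = {
    "ping": lambda params: {},                         # MCP's ping expects an empty result
    "notifications/initialized": lambda params: None,  # sent by the client after setup
}
print(handle(make_request("ping", {}, 1), handlers))
```

Matching replies to requests by `id` is what lets a caller keep several requests in flight at once instead of blocking on each one.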

A Flexible Transport System

This communication happens over a dual-layer transport system. For local communication between processes on the same machine, it uses standard input/output (stdio), which avoids network overhead entirely. For remote communication, it uses HTTP with Server-Sent Events (SSE), a web standard for streaming real-time updates from a server to a client; newer revisions of the specification replace this with a “Streamable HTTP” transport that can still use SSE for streaming. This hybrid approach keeps performance high whether an AI workload is talking to a component on the same machine or miles away in a different data center.
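On the stdio transport, MCP frames each JSON-RPC message as a single line of JSON terminated by a newline, and the older HTTP+SSE transport delivers server messages as SSE `message` events. The sketch below shows both framings with Python’s standard library; the function names are hypothetical, and it deliberately omits everything a real transport needs beyond framing (process management, HTTP endpoints, reconnection).

```python
from __future__ import annotations

import io
import json
from typing import Iterator, TextIO


def write_stdio(stream: TextIO, message: dict) -> None:
    # json.dumps escapes newlines inside strings, so each message occupies exactly one line.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_stdio(stream: TextIO) -> Iterator[dict]:
    # Each non-empty line is one complete JSON-RPC message.
    for line in stream:
        if line.strip():
            yield json.loads(line)


def sse_event(message: dict, event: str = "message") -> str:
    # An SSE event is a set of "field: value" lines terminated by a blank line.
    return f"event: {event}\ndata: {json.dumps(message)}\n\n"


# Round-trip two messages through an in-memory stream standing in for a pipe.
pipe = io.StringIO()
write_stdio(pipe, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
write_stdio(pipe, {"jsonrpc": "2.0", "method": "notifications/message",
                   "params": {"level": "info", "data": "line one\nline two"}})
pipe.seek(0)
for msg in read_stdio(pipe):
    print(msg)
print(sse_event({"jsonrpc": "2.0", "id": 1, "result": {}}), end="")
```

Newline framing is what makes stdio so cheap: the reader never needs length prefixes or buffering logic beyond “read one line”.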

A New Partnership: The Developer and the Context-Aware AI

Perhaps the most immediate and profound impact of MCP is being felt in software development. By creating a direct, context-rich link between AI models and the tools developers use every day, MCP is transforming the very nature of coding from a solitary human endeavor into a powerful human-machine collaboration.

The ‘Git Bridge’: An AI That Truly Understands Your Code

One of the most powerful applications of MCP is the creation of a “Git bridge.” Teams are embedding MCP servers directly into their internal Git environments, the version control systems that house their entire codebase. This gives an AI model direct, real-time access to the project’s history, architecture, dependencies, and business logic, all without costly and time-consuming retraining or fine-tuning.

The result is a paradigm shift. Instead of a developer feeding isolated snippets of code to a generic AI assistant and hoping for a useful suggestion, they now have a partner that understands the entire system. “It’s the difference between asking a stranger for directions and asking someone who has lived in the city their whole life,” explains Dr. Anya Sharma, a leading distributed systems analyst. “The context-aware AI doesn’t just see the single line of code; it sees the entire architectural blueprint. It understands the potential ripple effects of a change, suggesting refactors that are not only syntactically correct but architecturally sound.”
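A Git bridge is typically exposed to the model as one or more MCP tools. The sketch below assumes a hypothetical tool named `recent_commits`: its descriptor follows the `name`/`description`/`inputSchema` shape MCP uses when listing tools, and the handler returns a result in MCP’s `content`/`isError` shape. It shells out to the real `git log` command; the tool itself, its `limit` argument, and the helper names are illustrative assumptions.

```python
from __future__ import annotations

import subprocess
from typing import Any

# Hypothetical tool descriptor, in the name/description/inputSchema shape MCP uses for tools/list.
RECENT_COMMITS_TOOL = {
    "name": "recent_commits",
    "description": "List the most recent commits in the repository.",
    "inputSchema": {
        "type": "object",
        "properties": {"limit": {"type": "integer", "minimum": 1, "maximum": 100}},
    },
}

# git log placeholders: %h short hash, %an author name, %s subject; %x09 is a tab.
LOG_FORMAT = "%h%x09%an%x09%s"


def parse_git_log(output: str) -> list[dict[str, str]]:
    commits = []
    for line in output.splitlines():
        if not line.strip():
            continue
        short_hash, author, subject = line.split("\t", 2)  # subject may itself contain tabs
        commits.append({"hash": short_hash, "author": author, "subject": subject})
    return commits


def call_recent_commits(arguments: dict[str, Any], repo_path: str = ".") -> dict[str, Any]:
    """Handle a tools/call for recent_commits and return an MCP-style tool result."""
    limit = max(1, min(int(arguments.get("limit", 10)), 100))
    proc = subprocess.run(
        ["git", "-C", repo_path, "log", f"--pretty=format:{LOG_FORMAT}", "-n", str(limit)],
        capture_output=True,
        text=True,
    )
    if proc.returncode != 0:
        # Report git failures to the model as a tool error rather than raising.
        return {"content": [{"type": "text", "text": proc.stderr.strip()}], "isError": True}
    lines = [f"{c['hash']} {c['author']}: {c['subject']}" for c in parse_git_log(proc.stdout)]
    return {"content": [{"type": "text", "text": "\n".join(lines)}], "isError": False}
```

Parsing is kept separate from the subprocess call so it can be tested without a repository, and `git -C` points git at the repository path without changing the server’s working directory.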

From Simple Code Generation to Deep Architectural Insight

This deep understanding elevates the AI from a simple code generator to a true architectural consultant. It can scaffold new services that closely match existing patterns, generate comprehensive tests that cover subtle edge cases, and even translate complex logic between programming languages while preserving the original intent.

Accelerating the Pace of Innovation

This symbiotic relationship removes immense friction from the development cycle. Engineers are freed from mundane, repetitive tasks and can focus on higher-level problem-solving and creative design. The AI handles the intricate, detail-oriented work, dramatically speeding up iteration cycles. As software platforms continue to scale in complexity, this kind of AI-native infrastructure becomes less of a luxury and more of a necessity for maintaining both velocity and quality.

The Brains of the Operation: MCP in Real-Time Decision Making

Beyond the world of software development, MCP is emerging as a new architectural primitive for any system that requires intelligent, real-time decision-making. Its ability to process vast amounts of contextual data from diverse sources and act on it instantly is unlocking capabilities that were previously the stuff of science fiction.

Consider a sophisticated compliance framework for a global financial institution. Regulations are constantly shifting across different jurisdictions. A traditional, hard-coded system would require constant manual updates and be perpetually out of date. An MCP-driven system, however, can ingest regulatory feeds in real time, understand the context of a specific transaction (its origin, destination, and parties involved), and apply the correct, up-to-the-minute rules dynamically.
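The jurisdiction-aware check described above can be sketched as a rule registry that a regulatory feed keeps current. Everything here is hypothetical: the `Transaction` fields mirror the context the paragraph lists (origin, destination, parties), and the example rule’s 10,000 threshold is invented for illustration, not taken from any real regulation.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass(frozen=True)
class Transaction:
    origin: str                # jurisdiction of the sender, e.g. "DE"
    destination: str           # jurisdiction of the receiver
    amount: float
    parties: tuple[str, ...]   # identifiers of everyone involved


# A rule inspects a transaction and returns a violation message, or None if it passes.
Rule = Callable[[Transaction], Optional[str]]


class RuleRegistry:
    """Rules grouped by jurisdiction, replaceable at runtime as a regulatory feed changes."""

    def __init__(self) -> None:
        self._rules: Dict[str, Dict[str, Rule]] = {}

    def apply_update(self, jurisdiction: str, rule_id: str, rule: Optional[Rule]) -> None:
        # A feed update installs or replaces a rule; rule=None retires it.
        rules = self._rules.setdefault(jurisdiction, {})
        if rule is None:
            rules.pop(rule_id, None)
        else:
            rules[rule_id] = rule

    def check(self, tx: Transaction) -> list[str]:
        # Apply whatever rules are current for every jurisdiction the transaction touches.
        violations = []
        for jurisdiction in sorted({tx.origin, tx.destination}):
            for rule_id, rule in sorted(self._rules.get(jurisdiction, {}).items()):
                message = rule(tx)
                if message:
                    violations.append(f"{jurisdiction}/{rule_id}: {message}")
        return violations


registry = RuleRegistry()
registry.apply_update("DE", "large-transfer",
                      lambda tx: "report required" if tx.amount > 10_000 else None)
tx = Transaction("DE", "US", 15_000.0, ("acme-gmbh", "globex-inc"))
print(registry.check(tx))
```

Because rules are looked up at check time, a feed update takes effect on the very next transaction without redeploying anything.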

The same principle applies to fraud detection. Adversaries are constantly evolving their tactics, and an MCP-based fraud model can evolve right alongside them, identifying new patterns of attack as they emerge and adapting its defenses on the fly. In payment routing, it can weigh live network fees, congestion, and jurisdictional rules to send each transaction along the most efficient and cost-effective path, potentially saving millions in operational costs. MCP doesn’t just execute code; it orchestrates intelligent systems that learn and adapt at the speed of change.
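The routing decision can be reduced to a cost function over live inputs. The sketch below is a hypothetical model, not any payment network’s API: each route has a fixed fee, a percentage fee in basis points, a live congestion reading, and the set of jurisdictions it may serve, and congestion is converted into cost through an assumed `delay_penalty`.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    name: str
    fixed_fee: float              # flat fee per transaction
    fee_bps: float                # percentage fee in basis points (100 bps = 1%)
    congestion: float             # live reading from 0.0 (idle) to 1.0 (saturated)
    jurisdictions: frozenset[str] # where this route is allowed to operate


def expected_cost(route: Route, amount: float, delay_penalty: float) -> float:
    # Fees plus an assumed monetary penalty for the delay that congestion causes.
    return route.fixed_fee + amount * route.fee_bps / 10_000 + delay_penalty * route.congestion


def choose_route(routes: list[Route], amount: float, jurisdiction: str,
                 delay_penalty: float = 5.0, max_congestion: float = 0.9) -> Route | None:
    # Jurisdictional rules and a congestion cutoff filter first; cost decides among the rest.
    eligible = [
        r for r in routes
        if jurisdiction in r.jurisdictions and r.congestion < max_congestion
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda r: expected_cost(r, amount, delay_penalty))


routes = [
    Route("A", fixed_fee=0.30, fee_bps=29, congestion=0.2, jurisdictions=frozenset({"US", "EU"})),
    Route("B", fixed_fee=1.00, fee_bps=5, congestion=0.5, jurisdictions=frozenset({"US"})),
    Route("C", fixed_fee=0.10, fee_bps=10, congestion=0.95, jurisdictions=frozenset({"US", "EU"})),
]
for amount in (100.0, 1_000.0):
    print(amount, choose_route(routes, amount, "US").name)
```

With these made-up routes, a 100 payment in the US goes through A, while a 1,000 payment goes through B, whose low percentage fee outweighs its higher fixed fee and congestion; C is skipped because its congestion exceeds the cutoff.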

Breaking Free from the Cloud Monolith

For years, the technology industry has been on a relentless march toward cloud centralization. MCP represents a powerful counter-movement: the decoupling of computing from specific locations or providers. By creating this fluid, interoperable layer, MCP frees workloads from the bottlenecks of the centralized cloud.

This freedom is not merely a technical detail; it is a profound strategic advantage.

Building Antifragile Systems for a Volatile World

The essayist and risk analyst Nassim Nicholas Taleb coined the term “antifragile” to describe systems that don’t just resist stress but actually get stronger from it. This is the ultimate promise of MCP’s decoupled architecture. A traditional, cloud-dependent company is fragile; if its single provider has a regional outage, its service goes down. An MCP-powered infrastructure is antifragile. If a region or provider fails, workloads can automatically and seamlessly migrate to another available resource, whether in a different cloud, an on-premises server, or an edge location. The system not only survives the shock but also learns from it, potentially re-routing traffic to avoid similar dependencies in the future. Instead of brittle, monolithic stacks, MCP enables the creation of resilient, self-healing infrastructures.
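The fail-over-and-remember behavior can be sketched as a small router that skips unhealthy targets and keeps a decaying failure score for each one, so a target that failed recently stays deprioritized even after it recovers. This is an illustrative policy under assumed names (`FailoverRouter` and the target labels are hypothetical); production systems would add real health probes and state replication.

```python
from __future__ import annotations


class FailoverRouter:
    """Route work to the most trustworthy healthy target, remembering past failures."""

    def __init__(self, targets: list[str], penalty: float = 1.0, decay: float = 0.5) -> None:
        self.targets = list(targets)                  # listed in order of preference
        self.healthy = {t: True for t in self.targets}
        self.failure_score = {t: 0.0 for t in self.targets}
        self.penalty = penalty  # added to a target's score each time it fails
        self.decay = decay      # multiplier applied to the score on each successful check

    def report(self, target: str, ok: bool) -> None:
        # Health checks feed in here; failures raise the score, successes let it fade.
        self.healthy[target] = ok
        if ok:
            self.failure_score[target] *= self.decay
        else:
            self.failure_score[target] += self.penalty

    def pick(self) -> str:
        candidates = [t for t in self.targets if self.healthy[t]]
        if not candidates:
            raise RuntimeError("no healthy target available")
        # Lowest failure score wins; the original preference order breaks ties.
        return min(candidates, key=lambda t: (self.failure_score[t], self.targets.index(t)))


router = FailoverRouter(["cloud-a/us-east", "cloud-b/eu-west", "on-prem/rack-1"])
router.report("cloud-a/us-east", ok=False)  # regional outage
print(router.pick())                        # fails over to cloud-b/eu-west
router.report("cloud-a/us-east", ok=True)   # region recovers, but its score only halves
print(router.pick())                        # still cloud-b/eu-west
```

The decaying score is the “learning” part: a flaky region has to stay healthy for a while before it wins traffic back.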

The Dawn of True Digital Agility

In today’s hyper-competitive landscape, agility is a condition for survival. Decoupling compute makes that agility structural, not just aspirational. Companies are no longer constrained by the limitations of their infrastructure vendor. They can launch new services in new markets quickly, using local computing resources to comply with data sovereignty laws and cut latency for users. They can experiment with new AI models and platforms without fear of being locked into a single ecosystem. With MCP, infrastructure transforms from a bottleneck and a cost center into a formidable competitive asset.

The Protocol in Action: Where MCP is Already Making Waves

While MCP as a holistic concept is still gaining traction, its core principles are already powering breakthroughs across the AI landscape. We see its spirit in frameworks like:

DeepSpeed, which accelerates the training of massive large language models by partitioning model states (parameters, gradients, and optimizer states) and computation across many GPUs.

TensorFlow Federated, which enables decentralized machine learning on distributed data, a key component for privacy-preserving AI.

PyTorch on Kubernetes, a combination that allows AI workloads to be scaled elastically and dynamically based on demand, a core tenet of composable infrastructure.

ONNX Runtime, which optimizes the inference of AI models across a vast array of different hardware, embodying the principle of decoupling the model from the underlying compute.

Digital Twins in smart factories, which use real-time, context-aware data from thousands of sensors to create a living virtual model of the entire production line for predictive maintenance and optimization.
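The partitioning mentioned for DeepSpeed can be quantified. Its ZeRO optimizer splits model states across GPUs in stages: stage 1 partitions optimizer states, stage 2 adds gradients, and stage 3 adds the parameters themselves. Assuming mixed-precision Adam, which the ZeRO paper accounts for as 2 bytes of fp16 parameters, 2 bytes of fp16 gradients, and K = 12 bytes of optimizer state per parameter, per-GPU memory for model states works out as below (activations and temporary buffers are ignored).

```python
def zero_model_state_bytes(num_params: float, num_gpus: int, stage: int, k: int = 12) -> float:
    """Per-GPU bytes for model states (parameters, gradients, optimizer states) under ZeRO."""
    params = 2 * num_params   # fp16 parameters: 2 bytes each
    grads = 2 * num_params    # fp16 gradients: 2 bytes each
    optim = k * num_params    # optimizer states: K bytes each (12 for mixed-precision Adam)
    if stage == 0:            # plain data parallelism: every GPU holds everything
        return params + grads + optim
    if stage == 1:            # partition optimizer states
        return params + grads + optim / num_gpus
    if stage == 2:            # also partition gradients
        return params + (grads + optim) / num_gpus
    if stage == 3:            # also partition the parameters themselves
        return (params + grads + optim) / num_gpus
    raise ValueError("stage must be 0, 1, 2 or 3")


for stage in range(4):
    gb = zero_model_state_bytes(7.5e9, num_gpus=64, stage=stage) / 1e9
    print(f"stage {stage}: {gb:.1f} GB per GPU")
```

For a 7.5-billion-parameter model on 64 GPUs this gives about 120 GB per GPU without partitioning, 31.4 GB at stage 1, 16.6 GB at stage 2, and 1.9 GB at stage 3, which is why partitioning lets models far larger than one GPU’s memory be trained.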

These technologies, and many others like them, are the harbingers of a new era. As artificial intelligence, decentralized systems like blockchain, and adaptive infrastructure continue to converge, MCP or protocols built on its principles will become the undisputed digital backbone. They will provide the low-latency, high-throughput, context-aware computing that the next generation of intelligent, autonomous, and distributed systems demands. For the architects of our digital future, MCP isn’t just another tool; it’s the cornerstone of a new, more resilient, and more intelligent world.

Source: https://www.techradar.com
