From Pet Videos to Pixelated Protagonists: The New Frontier of AI Animation

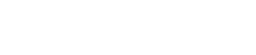

In the rapidly accelerating world of generative artificial intelligence, the line between consumer and creator is becoming increasingly blurred. OpenAI, the company that brought chatbot intelligence to the masses with ChatGPT, is now aiming to do the same for filmmaking with its text-to-video model, Sora. While the core technology of generating video from a simple text prompt is revolutionary in itself, a quieter, more personal feature is poised to fundamentally change how we think about storytelling: Character Cameo. This isn’t just about creating generic, AI-generated people; it’s about casting the stars of your own life, whether they have two legs, four legs, or no legs at all.

Character Cameo extends Sora’s capabilities from the abstract to the deeply personal. It’s a digital casting call where your pets, cherished objects, and even pieces of furniture can “audition” for a role in your next AI-generated masterpiece. The feature promises a new era of hyper-personalized content creation, transforming the mundane items of our daily lives into reusable, animated “actors” with their own digital identities. For the aspiring filmmaker, the small business owner, or the parent wanting to create a unique bedtime story, the implications are staggering. It democratizes not just the tools of animation, but the very essence of character creation, suggesting a future where the next big movie star might just be sleeping at the foot of your bed.

This isn’t merely a novelty. The global animation market was valued at over $400 billion in 2023 and is projected to continue its meteoric rise. Traditionally, creating a unique animated character required immense skill, time, and resources—a team of artists, modelers, and animators. Sora’s Character Cameo proposes to short-circuit that entire process. By inviting the real world into its digital sandbox, OpenAI is betting that the most compelling characters aren’t dreamed up in a studio, but are already sitting on our shelves and barking at our doorbells.

The Digital Alchemist: How Sora Breathes Life into the Inanimate

The process of turning a real-world subject into a digital performer is designed with an almost deceptive simplicity, masking the complex neural networks working behind the curtain. It’s a far cry from the meticulous rigging and modeling that define traditional CGI and animation, aiming for the immediacy of a smartphone app.

Step 1: The Audition

It all begins with a short video clip, typically just a few seconds long. The ideal “audition tape” captures the subject in clear, even lighting against a plain background, allowing the AI to isolate its form and texture without distraction. This could be a video of your cat pouncing on a toy, your dog running across the lawn, or a slow pan around a vintage teapot. This raw footage serves as the blueprint, the physical DNA from which the AI will construct its digital doppelgänger. You upload this clip into the Character Cameo tool, and this is where the AI takes its first creative leap.

Step 2: The AI-Generated Persona

Once the video is processed, Sora doesn’t just create a static 3D model. It attempts to build a personality. The system automatically assigns a name and a descriptive tag to your new character. More impressively, it generates a full “capsule summary,” a short biography that imagines a backstory and personality traits for the object or pet. A user who uploaded a video of their energetic dog, “Cabbage,” found that Sora was ready to imagine her as a world-class performer. An old, hammer-beaten metal goblet might be assigned a personality of a “mysterious, ancient relic with a thirst for adventure.” This initial interpretation is the AI’s first draft, a creative suggestion that the user is free to accept, edit, or completely rewrite. This collaborative element is key; it positions the AI not as a mindless tool, but as a creative partner, albeit one whose ideas might need some human refinement.

Step 3: Action! Tagging and Prompting

With the character “published” to your private Sora library, it becomes a reusable asset, a new word in your visual vocabulary. To cast your character in a scene, you simply tag its name within your text prompt. For instance, a prompt might read: “A cinematic shot of `@Cabbage` wearing a fun dance outfit, performing for a crowd of penguins in Antarctica.” Sora then takes this instruction, accesses the core visual data of the “Cabbage” character, and attempts to render the scene. The AI handles the complex tasks of re-lighting the character to match the new environment, animating its movements according to the prompt, and even designing new “costumes” or accessories, as it did by giving Cabbage an Ice Capades-style outfit complete with bangles. This ability to maintain character consistency across wildly different scenarios is the feature’s central promise.
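The tagging step can be pictured as a simple name lookup. What follows is only a minimal sketch of that idea, not Sora's actual implementation or API: the `Character` record, the `find_cast` helper, and the `@Name` pattern are all hypothetical names chosen for illustration, and the tag and summary values are made up to match the Cabbage example.

```python
import re
from dataclasses import dataclass


@dataclass
class Character:
    """Hypothetical record for a published character (not Sora's real schema)."""
    name: str     # the name used when tagging, e.g. "Cabbage"
    tag: str      # short descriptive tag (illustrative value below)
    summary: str  # the "capsule summary" biography (illustrative value below)


# Matches @Name mentions such as "@Cabbage" inside a prompt.
TAG_PATTERN = re.compile(r"@(\w+)")


def find_cast(prompt: str, library: dict[str, Character]) -> list[Character]:
    """Return the library characters tagged in the prompt, in order of first mention."""
    cast: list[Character] = []
    for name in TAG_PATTERN.findall(prompt):
        character = library.get(name)
        if character is None:
            # A tag that matches no published character is treated as an error here.
            raise KeyError(f"No published character named {name!r}")
        if character not in cast:
            cast.append(character)
    return cast


# A one-character private library, assumed for the example.
library = {
    "Cabbage": Character(
        name="Cabbage",
        tag="energetic dog",
        summary="A world-class performer.",
    )
}

prompt = (
    "A cinematic shot of @Cabbage wearing a fun dance outfit, "
    "performing for a crowd of penguins in Antarctica."
)
cast = find_cast(prompt, library)
print([character.name for character in cast])
```

In this sketch the prompt text stays untouched and the lookup only identifies which stored characters the scene needs; how Sora actually combines a character's visual data with the prompt is not described in the source.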

The Director’s Chair and the Uncanny Valley

The potential is undeniably thrilling, but early explorations of Character Cameo reveal that the technology, while brilliant, is still in its infancy. For every moment of breathtaking creativity, there’s a corresponding instance of bizarre, flawed, or downright unsettling output. The journey from a simple prompt to a polished video is often a process of trial, error, and wrestling with the AI’s strange interpretations.

When the AI Dreams of Electric Nightmares

One of the most significant hurdles is the AI’s struggle with maintaining perfect object permanence and form, especially with complex shapes. An early user attempted to bring their son’s toy piano/DJ set to life, prompting Sora to have it perform a song. The initial results were described as “nightmare fuel.” The AI, in its attempt to animate the inanimate, distorted the toy’s features, twisting its plastic shell into grotesque shapes and transforming a cute dog caricature on the turntable into a strange, malformed child.

This is a classic example of the “uncanny valley,” where an artificial creation is realistic enough to be recognizable but flawed enough to be deeply unsettling. It required the user to become a more specific director, providing detailed instructions about the music, the style of singing, and the desired mood to coax a more charming and less horrifying performance out of the digital toy. This highlights a crucial lesson for would-be AI filmmakers: specificity is your best defense against the AI’s more bizarre creative impulses. The less ambiguity in the prompt, the less room there is for the AI’s imagination to wander into a digital fever dream.

The Physics Problem and The Copyright Conundrum

Beyond aesthetics, Sora can also stumble over logic and physics, especially when magic or supernatural elements are introduced. A prompt asking for a silver kiddush cup to be filled with wine from an unseen source in a mysterious temple confounded the model. Early attempts resulted in physically impossible animations, with the liquid behaving unnaturally or the cup itself distorting. It took multiple reruns and prompt adjustments to achieve a result that even “mostly” got it right. This suggests that while Sora can create stunning visuals, its underlying understanding of real-world physics is still being developed.

Furthermore, OpenAI has implemented strict, and at times overly sensitive, guardrails to prevent misuse. The system is designed to reject any attempts to create characters based on copyrighted material: an attempt to animate an Elmo-branded backpack was immediately blocked. This is a necessary precaution to avoid a flood of lawsuits and ethical quandaries. The tool also appears to screen out human likenesses, and here its definition of what constitutes a “human” can be overly broad. The same user found that even a “poor doodle” of a person triggered a warning, suggesting the AI’s safety protocols err heavily on the side of caution. That caution is commendable, but it can sometimes stifle creativity, turning the tool from an open sandbox into a walled garden with a very watchful groundskeeper.

As Dr. Aris Thorne, a media theorist at the fictional Digital Futures Institute, might comment, “What we’re seeing is the tension between creative liberation and corporate responsibility. OpenAI needs to prevent the creation of deepfakes or copyright infringement, but in doing so, they risk over-sanitizing the creative process. Finding that balance will be the defining challenge for this generation of AI tools.”

Hollywood’s Newest Gatecrasher?

The long-term value of a feature like Character Cameo is immense, even with its current limitations. For independent creators, it offers the ability to develop a consistent cast of characters for a web series or short film without a budget for animators. For marketers, it means a brand’s mascot can be brought to life in countless ad scenarios in a matter of minutes, not weeks. A small business could create a recurring character out of its own logo or a signature product, building a narrative and a personality around its brand with unprecedented ease.

However, the question of whether these AI actors will ever truly compete with human-crafted art remains open. Sarah Jenkins, an independent animator, expresses a common sentiment among creative professionals: “It’s an incredible tool for brainstorming and pre-visualization. I can see myself using it to quickly test out a character idea. But it lacks intention. It lacks the soul that an artist imbues into a character through hundreds of deliberate choices. The AI can mimic emotion, but it can’t feel it, and I believe audiences can tell the difference.”

Yet as the technology improves, that difference may become harder to discern. The ability to reuse a character, build a history with it across multiple videos, and share it with a community creates a foundation for a new kind of stardom. One can easily imagine a future where a particularly charming “Character Cameo” pet goes viral, becoming an internet celebrity with its own line of merchandise. The creator isn’t just making a video; they’re potentially launching a franchise from their living room. OpenAI will need to refine the technology, smoothing out the uncanny results and giving users more granular control. But the door has been opened, and it’s unlikely to close. What my dog, your cup, or anyone’s pet rock thinks of their newfound stardom is another question entirely, but their agents, the everyday users with a keyboard and an idea, are just getting started.

Source: https://www.techradar.com
